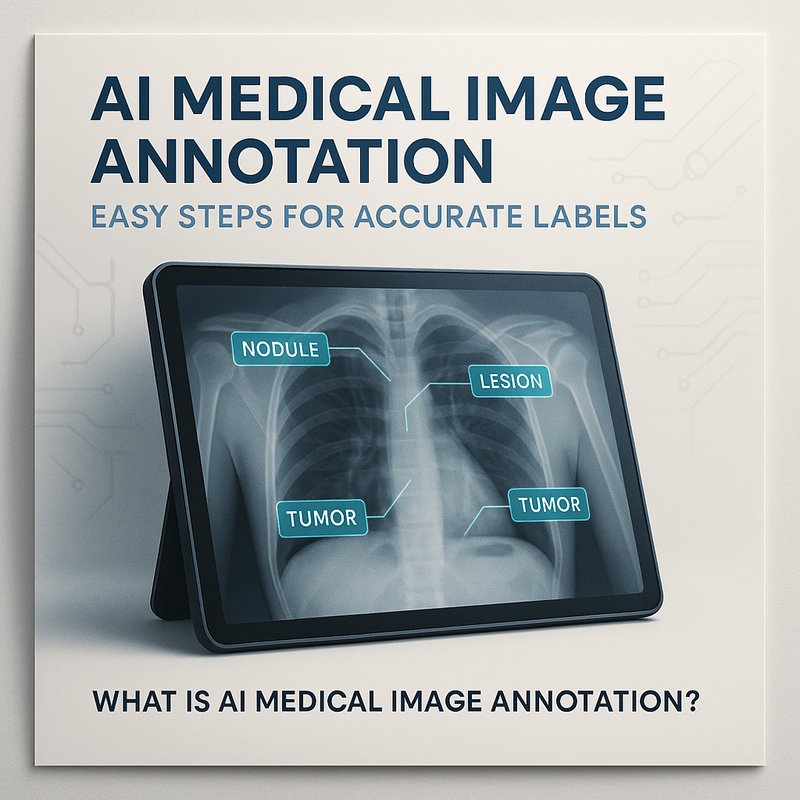

High‑quality labeled images are critical to medical research and diagnosis. AI medical image annotation lets teams label thousands of X‑rays, MRIs, or CT scans faster and more consistently than fully manual methods. This guide explains what it is, why it matters, which tools you can use, and how to build a smooth workflow that keeps patient data safe.

What Is AI Medical Image Annotation?

AI medical image annotation uses artificial intelligence models to suggest regions, objects, or features inside medical scans. Think of it as an assistant that draws bounding boxes, outlines tumors, or marks organs with a few clicks. The human reviewer then confirms or tweaks the suggestions, saving hours of repetitive work.

Key benefits:

- Speed: Model inference takes seconds or less per image on suitable hardware, so annotators spend their time reviewing suggestions instead of drawing every outline from scratch.

- Consistency: Machine‑generated tags follow a single standard, reducing variability that comes from different annotators.

- Scalability: You can expand the dataset without hiring more annotators.

Why Accurate Labels Matter in Healthcare

- Training Better AI Models: Machine‑learning models for cancer detection, fracture identification, or disease segmentation all rely on labeled data. A mislabelled image can mislead a model, leading to false positives or missed diagnoses.

- Regulatory Compliance: Regulatory bodies like the FDA require transparent, traceable datasets. AI‑assisted annotation tools often log every edit, making audit trails easier to build.

- Research and Discovery: Researchers use annotated datasets to discover biomarkers, track disease progression, or develop new imaging protocols. Accuracy directly impacts the validity of findings.

Popular Tools for AI Medical Image Annotation

| Tool | Core Feature | How It Helps with AI Annotation |
|---|---|---|
| Label Studio | Open‑source labeling interface | Integrates with pre‑trained models for auto‑suggestions |
| CVAT | Web‑based annotation tool | Supports GPU‑accelerated inference for quick feedback |
| Scribble-Anything | Fine‑tuning Segment Anything | Lets you train on a small sample set for custom organs |
| Detectron2 (Meta AI) | Object detection and segmentation framework | Ships model‑zoo weights pre‑trained on general datasets such as COCO; fine‑tune them on medical images before use |
| Neura Artifacto | Multi‑modal chat interface | Generates annotation prompts and refines model outputs |

Choosing the right combination depends on data type (2D vs 3D), privacy requirements, and team size.

Building a Smooth Annotation Pipeline

Below is a practical, step‑by‑step workflow that needs less than an hour of setup.

1. Gather and Protect the Data

- Collect the imaging files (DICOM, NIfTI, JPEG).

- Mask patient identifiers and encrypt the data before upload.

- Store in a secure, access‑controlled bucket or on‑prem server.
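
As a rough illustration of the masking step, the sketch below drops direct identifiers from a metadata record and replaces the patient ID with a keyed hash, so scans from the same patient stay linked without exposing the original ID. The field names, the `pseudonymize` helper, and the key are assumptions made for this example. A real DICOM de‑identification has to cover far more tags, plus any text burned into the pixels, so treat this as a starting point rather than a complete procedure.

```python
import hashlib
import hmac

# Hypothetical field names for illustration; real DICOM headers carry many more
# identifying tags, and text burned into the pixels needs separate handling.
DIRECT_IDENTIFIERS = {"PatientName", "PatientBirthDate", "PatientAddress"}


def pseudonymize(metadata: dict, secret_key: bytes) -> dict:
    """Drop direct identifiers and replace PatientID with a keyed hash."""
    cleaned = {k: v for k, v in metadata.items() if k not in DIRECT_IDENTIFIERS}
    digest = hmac.new(secret_key, metadata["PatientID"].encode("utf-8"), hashlib.sha256)
    # Truncated for readability; the same patient always maps to the same pseudonym.
    cleaned["PatientID"] = digest.hexdigest()[:16]
    return cleaned


record = {
    "PatientID": "MRN-001234",
    "PatientName": "DOE^JANE",
    "PatientBirthDate": "19700101",
    "Modality": "CT",
}
key = b"replace-with-a-secret-from-your-key-store"  # placeholder, not a real key
safe_record = pseudonymize(record, key)
print(safe_record)
```

Using a keyed hash instead of a plain hash means someone who knows a patient's original ID cannot recompute the pseudonym without the key, which should be stored separately from the images.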

2. Pre‑process Images

- Convert to a standard format if needed.

- Normalize intensity values so the model sees consistent inputs.

- Resize or crop images to fit the AI model’s expected size.
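
One common way to normalize intensities, shown here as a minimal sketch, is to clip values to a fixed window and rescale them to the range 0 to 1. The function name and the window bounds are illustrative assumptions, not recommended clinical settings. The example uses plain Python lists so it runs without dependencies; on real scans you would more likely do the same thing on NumPy arrays.

```python
def window_and_normalize(pixels, low, high):
    """Clip each value to [low, high], then rescale linearly to [0.0, 1.0]."""
    if high <= low:
        raise ValueError("high must be greater than low")
    span = high - low
    return [
        [(min(max(value, low), high) - low) / span for value in row]
        for row in pixels
    ]


# Toy 2x3 "CT slice"; the values and the window are for illustration only.
ct_slice = [
    [-1000, -160, 40],
    [240, 400, 3000],
]
normalized = window_and_normalize(ct_slice, low=-160, high=240)
print(normalized)  # [[0.0, 0.0, 0.5], [1.0, 1.0, 1.0]]
```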

3. Run the AI Annotation Engine

- Load the model (e.g., Segment Anything fine‑tuned on your dataset).

- Batch‑process images; the AI outputs bounding boxes or segmentation masks.

- Save the suggestions as JSON, for example in COCO format.
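
To make the output step concrete, here is a small sketch that turns one binary mask into a COCO‑style annotation entry and serializes it as JSON. The helper name, file name, and category are made up for the example, and the `segmentation` field (polygon or run‑length encoding) that a full COCO file would carry is left out to keep it short.

```python
import json


def mask_to_coco_annotation(mask, image_id, category_id, annotation_id):
    """Build a COCO-style annotation (bbox and area) from a binary mask."""
    rows = [y for y, row in enumerate(mask) if any(row)]
    cols = [x for row in mask for x, value in enumerate(row) if value]
    if not rows:
        return None  # the model suggested nothing for this image
    x_min, x_max = min(cols), max(cols)
    y_min, y_max = rows[0], rows[-1]
    return {
        "id": annotation_id,
        "image_id": image_id,
        "category_id": category_id,
        # COCO boxes are [x, y, width, height] in pixels.
        "bbox": [x_min, y_min, x_max - x_min + 1, y_max - y_min + 1],
        "area": sum(1 for row in mask for value in row if value),
        "iscrowd": 0,
    }


# Toy 3x4 mask standing in for a model output.
mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 0, 0],
]
annotation = mask_to_coco_annotation(mask, image_id=1, category_id=1, annotation_id=1)
coco = {
    "images": [{"id": 1, "file_name": "scan_0001.png", "width": 4, "height": 3}],
    "categories": [{"id": 1, "name": "lesion"}],
    "annotations": [annotation],
}
coco_json = json.dumps(coco, indent=2)
print(coco_json)
```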

4. Review and Edit in a GUI

- Open the annotations in Label Studio or CVAT.

- Annotators quickly adjust boundaries, merge duplicates, or delete false positives.

- Export the final annotations to your training set.

5. Validate Quality

- Compare the annotations against a small, expert‑labeled validation set and compute IoU (Intersection over Union).

- Check that all critical organs or lesions are correctly labeled.

- Iterate: feed the corrected data back to fine‑tune the model, reducing future effort.
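
IoU is the number of pixels two masks share divided by the number of pixels covered by either one. The sketch below computes it for two binary masks of the same shape. Returning 1.0 when both masks are empty is a convention chosen for this example; some teams prefer to skip such cases instead.

```python
def mask_iou(predicted, reference):
    """Intersection over Union for two binary masks of the same shape."""
    intersection = 0
    union = 0
    for pred_row, ref_row in zip(predicted, reference):
        for p, r in zip(pred_row, ref_row):
            if p and r:
                intersection += 1
            if p or r:
                union += 1
    # Assumed convention: two empty masks count as a perfect match.
    return intersection / union if union else 1.0


predicted = [
    [1, 1, 0],
    [0, 1, 0],
]
reference = [
    [1, 0, 0],
    [0, 1, 1],
]
score = mask_iou(predicted, reference)
print(score)  # 0.5 (2 shared pixels out of 4 covered)
```

A score near 1.0 means the AI suggestion and the expert label almost coincide. Where you set the acceptance threshold depends on the structure being labeled, since small lesions lose IoU quickly from a shift of only a few pixels.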

6. Integrate into Your ML Pipeline

- Load the annotated dataset into PyTorch, TensorFlow, or any framework you’re using.

- Train your segmentation or classification model on the reviewed labels.

- Monitor performance on hold‑out sets to detect drift early.
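
A hold‑out set is only useful if it stays separate from the training data. One way to arrange that, sketched here under the assumption that every scan carries a (pseudonymized) patient ID, is to assign whole patients to a split by hashing the ID. The function name and the 20% fraction are illustrative choices.

```python
import hashlib


def assign_split(patient_id: str, holdout_fraction: float = 0.2) -> str:
    """Deterministically place a patient in 'holdout' or 'train'."""
    digest = hashlib.sha256(patient_id.encode("utf-8")).digest()
    # Map the first 8 bytes of the hash to a number in [0, 1).
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    return "holdout" if bucket < holdout_fraction else "train"


# Hypothetical scans: (patient ID, file name).
scans = [
    ("patient-a", "a_slice_01.png"),
    ("patient-a", "a_slice_02.png"),
    ("patient-b", "b_slice_01.png"),
]
splits = {file_name: assign_split(patient_id) for patient_id, file_name in scans}
print(splits)
```

Because the split depends only on the patient ID, all slices from one patient land on the same side, and the assignment stays stable when new scans are added later.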

Best Practices for Reliable AI Medical Annotation

| Practice | Why It Matters | How to Apply |
|---|---|---|
| Human‑in‑the‑Loop | Machines are great, but clinical judgment is gold. | Always have a certified radiologist confirm final labels. |
| Bias Auditing | Unequal representation can lead to unfair predictions. | Audit label distribution across demographics before training. |
| Version Control | Medical data changes over time; you need reproducibility. | Tag each annotation round with a version number. |
| HIPAA / GDPR | Legal compliance protects patients and institutions. | Encrypt data at rest and in transit; use anonymized IDs. |
| Continuous Feedback | Models improve with fresh data. | Create a feedback loop where new cases are automatically sent back for annotation. |
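
For the bias‑auditing practice, a first check can be as simple as comparing how often each label appears within each demographic group. The sketch below assumes each annotation record is a dictionary with a group field and a `label` field; those field names and the toy records are invented for the example.

```python
from collections import Counter


def label_distribution(records, group_key, label_key="label"):
    """Return, per group, the share of records carrying each label."""
    counts = {}
    for record in records:
        counts.setdefault(record[group_key], Counter())[record[label_key]] += 1
    return {
        group: {label: n / sum(counter.values()) for label, n in counter.items()}
        for group, counter in counts.items()
    }


# Toy records; a real audit would read these from the exported annotations.
records = [
    {"sex": "F", "label": "nodule"},
    {"sex": "F", "label": "normal"},
    {"sex": "M", "label": "nodule"},
    {"sex": "M", "label": "normal"},
    {"sex": "M", "label": "normal"},
    {"sex": "M", "label": "normal"},
]
distribution = label_distribution(records, group_key="sex")
print(distribution)
```

A large gap between groups does not prove the labels are biased, but it tells you where to look before training.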

Real‑World Success Stories

- CityHealth Hospital used AI medical image annotation to double the size of its chest X‑ray dataset in two weeks, reducing the time to deploy a pneumonia detector from 3 months to 6 weeks.

- NeuroLab Research Group employed a Segment Anything model to annotate 10,000 MRI slices in one day, work that would otherwise have taken a team of 4 annotators 2 months, enabling a new study on early‑stage Alzheimer’s.

- PharmaCo integrated automated annotation into its pipeline, cutting the cost per annotated scan by 70% while maintaining FDA‑required audit logs.

Integrating Neura AI Tools into Your Annotation Workflow

Neura’s ecosystem offers seamless connectors that fit naturally into the annotation pipeline:

| Neura Tool | How It Helps |
|---|---|
| Neura ACE | Generates custom prompts to fine‑tune your segmentation models; automates model training scripts. |
| Neura Artifacto | Lets annotators chat with the model, ask for clarifications, and get instant suggestions in the interface. |
| Neura Router | Routes requests to the best model provider (OpenAI, Anthropic, etc.) for your annotation tasks. |
| Neura TSB | Provides real‑time transcriptions if you’re annotating video‑based imaging (e.g., ultrasound). |

Example:

Start with Neura ACE to auto‑generate a fine‑tuning pipeline for Segment Anything on your DICOM set. Run the job, then let Neura Artifacto walk your annotators through the UI, making corrections on the fly. Finally, use Neura Router to switch to a more powerful GPU cluster when batch processing spikes.

Future Directions in AI Medical Image Annotation

- 3‑D Volumetric Annotation: Models will begin to segment full volumes, not just 2‑D slices, reducing the need for slice‑by‑slice labeling.

- Multimodal Fusion: Combining CT, MRI, and PET data within a single AI pipeline will provide richer context for annotations.

- Self‑Supervised Learning: Pre‑training on unlabeled scans can drastically lower annotation needs, letting you generate pseudo‑labels that the AI refines over time.

- Explainable AI in Annotation: Future tools will highlight which image features led to a label, helping clinicians trust AI suggestions.

Takeaway

AI medical image annotation is no longer a niche concept; it’s a practical solution that speeds up research, improves diagnostics, and keeps patient data protected. By combining the right models, tools, and best practices, you can build a pipeline that delivers high‑quality labels in minutes, not days.

Whether you’re a radiology department, a research lab, or a biotech startup, adopting AI annotation now will give you a competitive edge in AI‑driven healthcare.